High-Dynamic-Range Imaging with Modern Industrial Cameras

Published on May 7, 2018 by TIS Marketing.

While resolution and speed (frame rate) were the classic criteria for selecting a suitable industrial camera, sensitivity and dynamic range are becoming increasingly important, especially for cameras used in the automotive sector. Real-world scenes with large brightness variations, such as those encountered while driving, benefit in particular from a sensor with a wide dynamic range. Take, for example, a car driving out of a tunnel into bright daylight: sensors with a low dynamic range typically deliver images with large under- or overexposed regions, and the detail (i.e. data) in those regions is lost. If driver-assistance systems rely on this data, such a loss could prove fatal. In such situations, the greatest possible dynamic range is essential to capture important detail in both very bright and very dark areas.

Increasing Dynamic Range: Two Approaches

In principle, there are two ways to increase the dynamic range of the final images: hardware improvements that increase the dynamic range of the sensor itself, and software algorithms.

The dynamic range of a CMOS sensor depends on the maximum number of electrons a pixel can hold before it saturates (saturation capacity) and on the pixel's dark noise (i.e. the noise that occurs when reading out the charge). To increase the dynamic range, one can therefore try to reduce the dark noise further or to increase the saturation capacity. Whereas dark noise depends on the sensor electronics, the saturation capacity can be increased either with larger pixels (a larger photodiode area can hold more charge before saturating) or through intrinsic improvements to the pixel structure. Sony's Pregius sensor technology in particular has recently demonstrated that improved pixel design, combined with reduced dark noise, can deliver a remarkable increase in dynamic range without any change in pixel size. Sony's IMX 265 Pregius sensor, for example, achieves a dynamic range of 70.5 dB at a pixel size of 3.45 µm. A higher saturation capacity enlarges the range of measurement that a pixel can cover. To quantize this larger range adequately, modern CMOS sensors usually require more than 8 bits; the Sony IMX 264 sensor, for instance, delivers a 12-bit quantized signal.
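The relationship described above is commonly expressed as the ratio of saturation capacity to dark noise, converted to decibels: DR = 20 · log10(saturation capacity / dark noise). The Python sketch below is a minimal illustration, not a datasheet calculation: it evaluates that ratio and estimates, as a rough rule of thumb, how many bits are needed to quantize it. The function names `dynamic_range_db` and `bits_for_dynamic_range` are hypothetical, and the 10,000 e⁻ / 2.5 e⁻ pixel values are invented for illustration; only the 70.5 dB figure comes from the text.

```python
import math

def dynamic_range_db(saturation_capacity_e, dark_noise_e):
    """Dynamic range in dB from saturation capacity and dark noise (both in electrons)."""
    return 20.0 * math.log10(saturation_capacity_e / dark_noise_e)

def bits_for_dynamic_range(dr_db):
    """Smallest integer bit depth whose code range covers the given dynamic range."""
    ratio = 10.0 ** (dr_db / 20.0)  # convert dB back to a linear ratio
    return math.ceil(math.log2(ratio))

# Hypothetical pixel values, chosen only for illustration (not datasheet figures):
print(round(dynamic_range_db(10_000, 2.5), 1))  # 72.0
# The 70.5 dB figure quoted in the text:
print(bits_for_dynamic_range(70.5))             # 12
```

Applied to the 70.5 dB quoted above, the linear ratio is roughly 3,350:1, or about 11.7 bits, which illustrates why an 8-bit signal cannot represent the full range and a 12-bit output is a natural fit.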

Improvements in Dynamic Range via Algorithms

In addition to improvements to the sensors themselves, dynamic range can also be increased algorithmically. These algorithms are based on image data acquired with different exposure times. Probably the best-known method of this type uses "time varying exposure" (i.e. several complete images acquired with different exposure times) as its data basis. The method is now used in many smartphones, in common image-editing programs and in photography in general, and is therefore familiar to a wide audience outside the machine vision market.

The basic assumption is that a sensor's final pixel values depend approximately linearly on the incident light quantity and the exposure time. If a pixel is not saturated, the underlying incident light quantity (or a quantity proportional to it) can therefore be determined from the pixel value and the known exposure time. For saturated pixels, the corresponding values from shorter exposures are used instead. In this way, the quantity of incident light can be determined over a larger range than would be possible with a single exposure. The advantage of an exposure sequence is that luminance can be determined over this enlarged range without any loss of spatial resolution. However, because the differently exposed images are captured one after another, unwanted artifacts can occur, especially with moving objects (e.g. ghosting).
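The merging rule above can be sketched in Python as follows. This is a minimal sketch, assuming a perfectly linear sensor response with the black level already subtracted and 12-bit raw values that saturate at 4095; the function name `merge_exposures` and the "use the longest unsaturated exposure" selection rule are illustrative choices, not The Imaging Source's implementation. Many practical HDR pipelines instead compute a weighted average over all unsaturated exposures.

```python
def merge_exposures(frames, exposure_times, saturation=4095):
    """Merge an exposure series into per-pixel radiance estimates.

    frames: list of images (flat lists of raw values, black level already removed).
    exposure_times: exposure time of each frame, in the same unit for all frames.
    """
    # Visit frames from longest to shortest exposure: longer exposures have better SNR.
    order = sorted(range(len(frames)), key=lambda i: exposure_times[i], reverse=True)
    radiance = []
    for p in range(len(frames[0])):
        for i in order:
            value = frames[i][p]
            if value < saturation:
                # Linear model: value is proportional to radiance * exposure time.
                radiance.append(value / exposure_times[i])
                break
        else:
            # Saturated in every frame: the shortest exposure gives only a lower bound.
            shortest = order[-1]
            radiance.append(frames[shortest][p] / exposure_times[shortest])
    return radiance

# Hypothetical 3-pixel example: a long (8 ms) and a short (1 ms) exposure.
print(merge_exposures([[4095, 2000, 100], [1000, 250, 12]], [8.0, 1.0]))
# [1000.0, 250.0, 12.5]
```

The first pixel is clipped in the 8 ms frame, so its estimate comes from the 1 ms frame; the other two come from the longer exposure, which has the better signal-to-noise ratio.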

Modern CMOS sensors such as Sony Pregius usually offer multi-exposure functions that capture native images with different exposure times, so the exposure time does not have to be changed manually between shots.

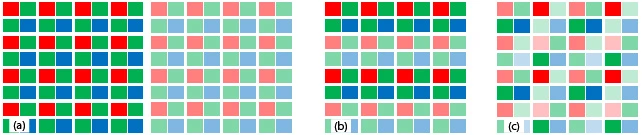

Spatially Varying Exposure

To avoid artifacts caused by multiple sequential exposures, modern sensors offer "Spatially Varying Exposure" technology. This technique exposes different groups of pixels on the sensor with different exposure times. A common variant, for example, exposes alternating image lines with two different exposure times. Since the exposures start simultaneously, artifacts caused by movement within the scene are minimized. However, each pixel is captured with only one of the exposure times, so there is no 1:1 correspondence between differently exposed pixels, and the pixels of the final HDR image must be calculated by interpolation. This inevitably costs resolution and can lead to artifacts, especially along edge structures. Furthermore, computing the final image via the required interpolation is more computationally intensive than computing it from an exposure series.
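The interpolation step can be illustrated with a deliberately simplified Python sketch. It assumes a monochrome sensor whose even lines use one exposure time and odd lines another, a linear response with the black level removed, and a saturation level of 4095; the function `reconstruct_interleaved` is hypothetical. Unsaturated pixels are converted directly to a radiance estimate, while saturated pixels borrow the estimate from the differently exposed lines directly above and below.

```python
def reconstruct_interleaved(image, t_even, t_odd, saturation=4095):
    """Line-interleaved HDR: even rows use exposure t_even, odd rows use t_odd.

    Returns a per-pixel radiance estimate (pixel value / exposure time).
    """
    height, width = len(image), len(image[0])

    def exposure(row):
        return t_even if row % 2 == 0 else t_odd

    result = []
    for r in range(height):
        out_row = []
        for c in range(width):
            value = image[r][c]
            if value < saturation:
                # Unsaturated: use this pixel's own exposure directly.
                out_row.append(value / exposure(r))
                continue
            # Saturated: this pixel was never sampled at the other exposure,
            # so interpolate from the rows directly above and below.
            neighbours = [
                image[rr][c] / exposure(rr)
                for rr in (r - 1, r + 1)
                if 0 <= rr < height and image[rr][c] < saturation
            ]
            if neighbours:
                out_row.append(sum(neighbours) / len(neighbours))
            else:
                # No usable neighbour: keep the clipped value as a lower bound.
                out_row.append(value / exposure(r))
        result.append(out_row)
    return result

# Hypothetical 3x2 image: even rows exposed for 8 ms, odd rows for 1 ms.
print(reconstruct_interleaved([[4095, 1000], [500, 125], [4095, 800]], 8.0, 1.0))
# [[500.0, 125.0], [500.0, 125.0], [500.0, 100.0]]
```

Because a saturated pixel's value is borrowed from neighboring lines, fine vertical detail at that position is averaged away, which is the resolution loss and edge sensitivity described above. Real implementations use more elaborate interpolation and must also handle color filter arrays.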

Display of HDR Images and Tone Mapping

When displaying HDR images, one quickly runs into the limited dynamic range of display devices, which is small compared with that of human visual perception. While HDR displays with a wider dynamic range are now available, they are still far from widespread. If an HDR image is to be shown on a device with a lower dynamic range, its dynamic range must be reduced by a process called tone mapping. There is no single correct way to perform this reduction; it depends on the desired goal, which may be, for example, the closest possible approximation of the actual scene or a particular subjective, artistic look. A basic distinction is made between global and local tone mapping algorithms. Global algorithms apply the same transformation to every pixel regardless of its location, which makes them very efficient and suitable for real-time processing. Local algorithms operate on local pixel neighborhoods and try, for instance, to preserve as much contrast as possible within those neighborhoods. Local tone mapping algorithms are more CPU-intensive but usually deliver images with higher local contrast.
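To make the global/local distinction concrete, the Python sketch below applies two well-known operators to a single row of linear luminance values. `tone_map_global` follows the global operator from Reinhard et al.'s "Photographic Tone Reproduction for Digital Images" (scale so the log-average luminance maps to a key value of 0.18, then apply L / (1 + L)); `tone_map_local` is a simplified local variant that divides by the mean of a fixed-radius neighborhood instead of using Reinhard's scale-selection scheme. Both are stand-ins chosen for illustration, not the algorithms used in The Imaging Source's software, the function names are hypothetical, and a real image would need a 2D neighborhood.

```python
import math

def _scale_to_key(lum, key=0.18, eps=1e-6):
    """Scale luminance so that its log-average maps to the chosen key value."""
    log_avg = math.exp(sum(math.log(eps + v) for v in lum) / len(lum))
    return [key * v / log_avg for v in lum]

def tone_map_global(lum, key=0.18):
    """Global operator: the same curve s / (1 + s) is applied to every pixel."""
    return [s / (1.0 + s) for s in _scale_to_key(lum, key)]

def tone_map_local(lum, radius=2, key=0.18):
    """Simplified local operator: each pixel is compressed relative to the
    mean of its fixed-radius neighbourhood rather than by a single global curve."""
    scaled = _scale_to_key(lum, key)
    out = []
    for i, s in enumerate(scaled):
        lo, hi = max(0, i - radius), min(len(scaled), i + radius + 1)
        local_mean = sum(scaled[lo:hi]) / (hi - lo)
        out.append(s / (1.0 + local_mean))
    return out

def to_8bit(values):
    """Quantize display values in [0, 1] to 8 bits, clipping anything above 1."""
    return [min(255, round(255 * v)) for v in values]

# One row of linear luminance spanning five orders of magnitude (made-up data).
row = [0.01, 0.02, 0.1, 1.0, 10.0, 100.0, 1000.0]
print(to_8bit(tone_map_global(row)))
print(to_8bit(tone_map_local(row)))
```

The global curve is monotonic, so the brightness order of pixels is preserved everywhere at very low cost; the local variant needs a neighborhood sum per pixel and can push a pixel above 1.0 when it is much brighter than its surroundings, which `to_8bit` simply clips.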

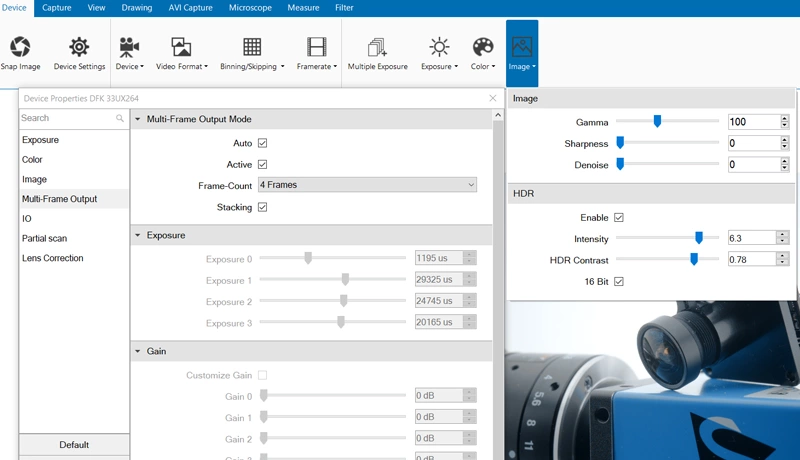

The Imaging Source recognized the importance of maximum dynamic range for machine vision applications long ago and therefore offers HDR image acquisition, as well as visualization and saving of HDR data via tone mapping, in its end-user software products and programming interfaces. Many programming hours went into making these algorithms user-friendly. The result is automatic modes that adapt all algorithm parameters to the scene without user intervention and deliver high-contrast shots with brilliant, natural colors. In particular, when the camera supports it, the end-user software IC Measure uses HDR functionality by default and presents HDR images to the user.

The above article, written by Dr. Oliver Fleischmann (Project Manager at The Imaging Source), was published in the April 2018 edition (02 2018) of the German-language industry journal inspect under the title, "High-Dynamic-Range Imaging in modernen Industriekameras."